Think of High Bandwidth Memory (HBM) not just as RAM, but as the exclusive, high-octane fuel for the world’s most powerful computer chips. It’s the super-fast memory that powers the GPUs training ChatGPT, creating stunning visual effects, and driving the next wave of scientific discovery.

In today’s AI-driven world, the raw power of a processor is only half the story. The real bottleneck is how quickly that chip can access the enormous amounts of data it needs to work. This is where HBM changes the game entirely.

As a technology analyst covering the AI and semiconductor industries, I’ve watched the term HBM go from a niche engineering spec to a headline-making component. Understanding High Bandwidth Memory is no longer just for hardware engineers; it’s essential for anyone who wants to grasp the technology shaping our future.

In this comprehensive guide, you’ll discover:

- What HBM is, using a simple “skyscraper vs. townhouses” analogy.

- The critical difference in the HBM vs. GDDR debate.

- Why HBM is so important for AI and high-performance computing.

- The evolution of the technology, from HBM2 to High Bandwidth Memory 3 (HBM3).

Don’t let complex hardware terms be a barrier to understanding the future. Let me demystify the technology that’s fueling the AI revolution.

1. What is High Bandwidth Memory?

For decades, computer memory followed a simple rule: If you wanted more capacity or performance, you had to build outwards, taking up more physical space. High Bandwidth Memory (HBM) completely rewrites that rulebook. To understand this revolution, let’s use a simple real estate analogy.

1.1. Traditional DDR/GDDR memory

Think of the regular DDR RAM in your PC or the GDDR memory on a gaming graphics card as a long, sprawling row of townhouses.

To increase your living space (memory capacity), you have to build more houses along the street, taking up a lot of valuable real estate (physical space on the circuit board). Each house has a relatively narrow front door. This “front door” is the memory bus, the electronic pathway for data. While you can make the furniture (data) move through it very quickly, you can only move one or two pieces at a time. The result is a system that’s fast, but fundamentally limited by its narrow access points.

1.2. High Bandwidth Memory (HBM)

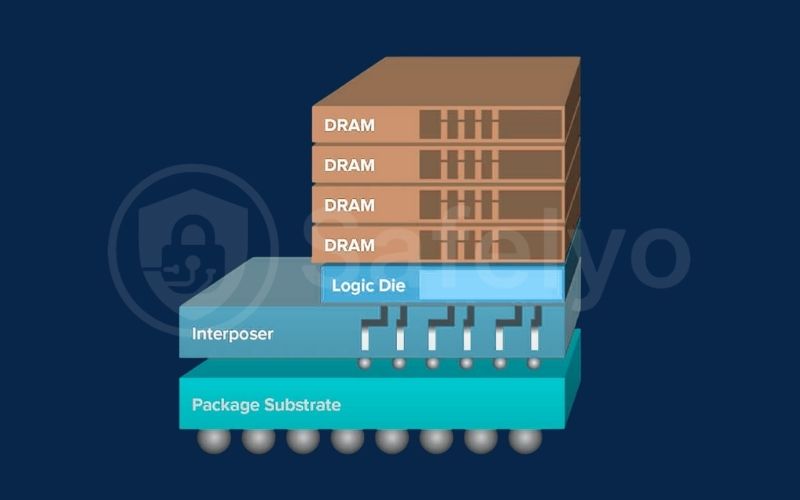

So, what is High Bandwidth Memory? It’s a revolutionary approach to memory architecture. Instead of building outwards, HBM builds upwards. It is a form of stacked DRAM, where multiple memory chips (DRAM dies) are stacked vertically on top of each other, creating a compact, hyper-dense “skyscraper” of memory.

This architectural shift creates two game-changing advantages:

- A Smaller Footprint: The High Bandwidth Memory “skyscraper” takes up far less physical “land” on the circuit board than the sprawling townhouses of GDDR. This allows engineers to place the memory much closer to the main processor (the GPU), reducing the distance data has to travel.

- A Super-Wide ‘Elevator’: This is the most important difference. Instead of a narrow front door, the skyscraper has a massive, super-wide service elevator that connects directly to all floors at once. This elevator is the ultra-wide memory bus: 1024 bits or wider per HBM stack, compared with the 128-bit to 384-bit bus typical of a GDDR graphics card. Its ability to move an enormous amount of furniture (data) in and out simultaneously is what creates the massive memory bandwidth.
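To see why the wide “elevator” matters, peak bandwidth can be estimated as bus width times per-pin data rate, divided by 8 to convert bits to bytes. The sketch below assumes illustrative figures: a 1024-bit HBM3 stack at 6.4 Gb/s per pin, a 384-bit GDDR6X card at 21 Gb/s per pin, and a hypothetical accelerator carrying five HBM3 stacks. The function name `peak_bandwidth_gb_s` is our own, not from any library.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: each pin moves data_rate Gbit/s; divide by 8 for bytes."""
    return bus_width_bits * data_rate_gbps_per_pin / 8

# Illustrative figures (assumptions, not the spec sheet of any particular product).
hbm3_stack = peak_bandwidth_gb_s(1024, 6.4)    # one HBM3 stack: wide bus, moderate pin speed
gddr6x_card = peak_bandwidth_gb_s(384, 21.0)   # whole GDDR6X card: narrow bus, fast pins
five_stack_accelerator = 5 * hbm3_stack        # hypothetical accelerator with five stacks

print(f"One HBM3 stack:          {hbm3_stack:.1f} GB/s")
print(f"One GDDR6X card:         {gddr6x_card:.1f} GB/s")
print(f"Five-stack accelerator:  {five_stack_accelerator:.1f} GB/s")
```

Each HBM pin here runs at well under a third of the GDDR6X pin rate, yet a single stack lands in the same range as an entire GDDR6X card, and accelerators multiply that by placing several stacks beside the processor.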

This incredible vertical connection isn’t magic. It’s made possible by thousands of microscopic, high-tech channels called through-silicon vias (TSVs) that act like the structural pillars and elevator shafts running directly through the stacked silicon chips. Here’s how the two philosophies stack up at a glance:

| Feature | HBM (Skyscraper) | GDDR (Townhouses) |
| --- | --- | --- |
| Structure | Vertical (3D-stacked) | Horizontal (2D planar) |
| Bandwidth | Extremely high | High |
| Power Use (per bit moved) | Lower | Higher |
| Physical Size | Smaller | Larger |
| Cost | Very high | Moderate |

2. HBM vs. GDDR: The showdown

Now that we have the “Skyscraper vs. Townhouses” analogy in mind, let’s put HBM and GDDR head-to-head in a more detailed technical comparison. As a tech analyst, I use this breakdown to explain to clients why these two memory types, while both fast, are built for completely different worlds.

| Feature | HBM (High Bandwidth Memory) | GDDR (Graphics Double Data Rate) |
| --- | --- | --- |
| Architecture | 3D-stacked. Multiple DRAM dies are stacked vertically and connected by TSVs. | 2D planar. Memory chips are placed side by side on the circuit board. |
| Memory Bus Width | Ultra-wide (1024-bit or wider per stack). A massive, multi-lane superhighway for data. | Narrower (typically 128-bit to 384-bit per card). A fast but narrower road. |
| Bandwidth | Extremely high. Multiple terabytes per second (TB/s) when several stacks are combined on one accelerator. | High. Hundreds of gigabytes per second (GB/s), reaching roughly 1 TB/s on flagship cards. |
| Power Consumption | Lower per bit. The short, direct vertical connections are more power-efficient. | Higher per bit. Pushing data at high clock speeds over longer distances uses more power. |
| Physical Size (Footprint) | Much smaller. Vertical stacking saves a huge amount of space around the processor. | Larger. The sprawling layout takes up significant board real estate. |
| Cost | Very high. The complex manufacturing and stacking process is expensive. | Moderate. A mature, cost-effective technology for mass-market products. |
| Best For… | AI training and inference, high-performance computing (HPC), data centers, supercomputers. | Gaming, consumer graphics cards, and professional workstations. |
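The power row can be made concrete with one multiplication: interface power is roughly the number of bits moved per second times the energy spent per bit. The energy-per-bit values below are hypothetical placeholders chosen only to show the shape of the trade-off, not measured figures for any product, and `interface_power_watts` is an illustrative helper.

```python
def interface_power_watts(bandwidth_gb_s: float, energy_pj_per_bit: float) -> float:
    """Approximate power to sustain a bandwidth: bits per second x joules per bit."""
    bits_per_second = bandwidth_gb_s * 1e9 * 8
    return bits_per_second * energy_pj_per_bit * 1e-12  # pJ -> J

# Hypothetical energy-per-bit values for illustration only;
# substitute datasheet figures before drawing real conclusions.
ASSUMED_PJ_PER_BIT = {
    "HBM (short, wide links)": 4.0,
    "GDDR (long, fast links)": 8.0,
}

target_bandwidth_gb_s = 1000.0  # sustain 1 TB/s with either memory type
for name, pj in ASSUMED_PJ_PER_BIT.items():
    watts = interface_power_watts(target_bandwidth_gb_s, pj)
    print(f"{name}: about {watts:.0f} W at {target_bandwidth_gb_s:.0f} GB/s")
```

Under these assumptions, halving the energy per bit halves the power needed to sustain the same bandwidth, which is why per-bit efficiency matters so much at data-center scale.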

In short, the choice is a trade-off.

For the consumer gaming market, GDDR6 offers fantastic performance at a sensible price. In the cutting-edge realm of AI and supercomputing, performance comes first and cost is a secondary concern. There, the massive memory bandwidth of High Bandwidth Memory is essential, and its efficiency is not just an advantage but a necessity.

3. Why is HBM so important for AI?

So, we know High Bandwidth Memory is incredibly fast. But why is HBM so important for AI specifically? The answer comes down to one simple fact: Large AI models are insatiably “data-hungry.”

Training a massive model like the ones behind ChatGPT involves constant data shuffling. Billions of parameters, and possibly trillions in the largest models, move in and out of the GPU’s memory. These parameters are the model’s learned weights: the numbers that encode what it has learned. The AI processor (the GPU) performs a calculation, needs a new set of data, fetches it from memory, performs the next calculation, and repeats this cycle billions of times.

3.1. The memory bottleneck problem

With traditional GDDR memory, that narrow “road” we talked about becomes a massive performance bottleneck.

Imagine a state-of-the-art, hyper-efficient factory (the powerful AI GPU) capable of producing a thousand products per minute. But what if it’s only being supplied with raw materials by a single, small delivery truck that’s stuck in traffic? The factory will spend most of its time sitting idle, waiting for the next delivery.

This is exactly what happens with AI workloads and slower memory. The multi-thousand-dollar GPU, the most powerful part of the system, is forced to wait, starving for data. From a performance analyst’s point of view, this is the worst-case scenario: an expensive asset being massively underutilized.
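The idle-factory problem can be quantified with a simple roofline-style model: a kernel takes at least as long as its compute time or its memory time, whichever is larger. This sketch assumes a hypothetical accelerator (1000 TFLOP/s of compute, 3 TB/s of bandwidth) and two textbook kernels in 16-bit precision: a matrix-vector multiply, which reads each weight once for two floating-point operations, and a large matrix-matrix multiply, which reuses data heavily. The class and function names are illustrative.

```python
from dataclasses import dataclass

@dataclass
class Accelerator:
    peak_tflops: float     # peak compute throughput in TFLOP/s (hypothetical)
    bandwidth_tb_s: float  # peak memory bandwidth in TB/s (hypothetical)

def compute_utilization(acc: Accelerator, flops: float, bytes_moved: float) -> float:
    """Fraction of peak compute a kernel can use under a simple roofline model."""
    compute_time = flops / (acc.peak_tflops * 1e12)
    memory_time = bytes_moved / (acc.bandwidth_tb_s * 1e12)
    # The kernel cannot finish before its slower resource does.
    return compute_time / max(compute_time, memory_time)

gpu = Accelerator(peak_tflops=1000.0, bandwidth_tb_s=3.0)
n = 8192
BYTES_FP16 = 2

# Matrix-vector multiply: 2 FLOPs per weight, each n-by-n weight read once.
matvec_util = compute_utilization(gpu, flops=2 * n**2, bytes_moved=BYTES_FP16 * n**2)
# Matrix-matrix multiply: 2*n^3 FLOPs over three n-by-n matrices (read A and B, write C).
matmul_util = compute_utilization(gpu, flops=2 * n**3, bytes_moved=3 * BYTES_FP16 * n**2)

print(f"matrix-vector: {matvec_util:.1%} of peak compute")
print(f"matrix-matrix: {matmul_util:.1%} of peak compute")
```

In this model the matrix-vector kernel leaves more than 99% of the compute idle, and for a memory-bound kernel like it, achievable utilization rises in direct proportion to memory bandwidth.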

3.2. HBM solves the bottleneck

High Bandwidth Memory solves this problem by replacing the small delivery truck with a 20-lane superhighway.

HBM’s massive memory bandwidth allows the GPU to be fed a constant, enormous stream of data, keeping it working at or near its maximum capacity far more of the time. This has a dramatic, real-world impact:

- It drastically reduces the time it takes to train large AI models.

- It enables the creation of bigger, more complex AI models that would be impractical with slower memory.

- It makes AI inference (the process of using a trained model) much faster and more efficient.
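A concrete case of faster inference: when a language model generates text one token at a time for a single user, it typically has to read every weight from memory for each new token, so bandwidth sets an upper bound on speed. The sketch below assumes a hypothetical 8-billion-parameter model stored in 16-bit precision (16 GB) and ignores batching, caches, and compute limits; the function name is illustrative.

```python
def max_decode_tokens_per_s(params_billions: float, bytes_per_param: float,
                            bandwidth_tb_s: float) -> float:
    """Upper bound on single-stream tokens/s if all weights are read once per token."""
    model_bytes = params_billions * 1e9 * bytes_per_param
    return bandwidth_tb_s * 1e12 / model_bytes

# Hypothetical bandwidth tiers, for illustration only.
for label, bw in [("~1 TB/s (GDDR-class card)", 1.0), ("~3 TB/s (HBM-class accelerator)", 3.0)]:
    tps = max_decode_tokens_per_s(params_billions=8, bytes_per_param=2, bandwidth_tb_s=bw)
    print(f"{label}: at most {tps:.1f} tokens/s")
```

Tripling bandwidth triples this ceiling. In practice, batching many requests together lets each weight read serve several users at once, which is one reason real throughput figures vary widely.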

In AI, real-world performance is often limited less by raw compute than by how fast data can be fed to that compute. That is why High Bandwidth Memory is essential for unlocking the transformative potential of modern AI chips.

4. The evolution of HBM: From HBM2 to HBM3 and beyond

High Bandwidth Memory isn’t a single, static technology. It’s a constantly evolving industry standard, with each new generation pushing the boundaries of performance. This evolution is governed by JEDEC, an independent standards body for the microelectronics industry whose specifications ensure that memory from one manufacturer will work with a processor from another.

As someone who follows these developments closely, watching the leap in performance from one generation to the next is always astounding. Here’s a quick overview of the key milestones.

- HBM2/HBM2E: This was the generation that truly brought HBM into the mainstream for high-end data center GPUs and supercomputers. Introduced around 2016, HBM2 offered a massive leap in bandwidth over the GDDR5 memory used at the time. The later “Extended” version, HBM2E, further increased speed and capacity, solidifying HBM’s place in high-performance computing.

- High Bandwidth Memory 3 (HBM3): Widely available since around 2022, HBM3 was a monumental step forward, roughly doubling the bandwidth of HBM2E and significantly increasing the potential memory capacity. This generation became a critical component for the explosion of large language models (LLMs), as it’s the memory used in flagship AI accelerators like NVIDIA’s H100 GPU.

- HBM3E and Beyond: The race for speed never stops. The newest evolution, HBM3E (“Extended”), is just now hitting the market, promising even faster data transfer rates and higher densities. Looking ahead, the industry, led by the major HBM manufacturers, is already deep in the development of HBM4. This next generation will likely involve even more sophisticated 3D-stacked designs and architectural changes to continue feeding the insatiable data demands of future AI models.
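The generational jumps can be checked with simple arithmetic: peak per-stack bandwidth is the 1024-bit stack interface times the per-pin data rate, divided by 8. The pin rates below are representative assumptions: the HBM2, HBM2E, and HBM3 values are commonly cited JEDEC rates (later revisions and vendor parts can run faster), and the HBM3E value is an assumed vendor-class figure.

```python
# Representative per-pin data rates in Gb/s (assumptions for illustration).
PIN_RATE_GBPS = {"HBM2": 2.0, "HBM2E": 3.2, "HBM3": 6.4, "HBM3E": 9.6}
STACK_WIDTH_BITS = 1024  # interface width of one stack in these four generations

def stack_bandwidth_gb_s(pin_rate_gbps: float, width_bits: int = STACK_WIDTH_BITS) -> float:
    """Peak bandwidth of one stack in GB/s (bits per second divided by 8)."""
    return width_bits * pin_rate_gbps / 8

for gen, rate in PIN_RATE_GBPS.items():
    print(f"{gen:<6} {stack_bandwidth_gb_s(rate):7.1f} GB/s per stack")

# Compare HBM3 against HBM2E at these assumed rates.
ratio = stack_bandwidth_gb_s(PIN_RATE_GBPS["HBM3"]) / stack_bandwidth_gb_s(PIN_RATE_GBPS["HBM2E"])
print(f"HBM3 vs. HBM2E: {ratio:.1f}x")
```

At these assumed rates, HBM3 delivers exactly twice the per-stack bandwidth of HBM2E, consistent with the “roughly doubling” described above.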

Each generation represents a critical step in ensuring the memory can keep pace with the ever-increasing power of the processors it serves.


5. Who makes HBM, and what’s next?

The incredible complexity of manufacturing 3D-stacked memory means that the market for High Bandwidth Memory is an exclusive club. While hundreds of companies design chips, only a handful have the immense technical expertise and capital investment needed to produce this cutting-edge technology at scale.

5.1. The key players: A three-horse race

As of 2025, the list of HBM manufacturers is dominated by three main semiconductor giants:

- SK Hynix: A South Korean company that has established itself as an early leader, particularly in the latest generations like HBM3 and HBM3E.

- Samsung: Another South Korean titan, Samsung is a major force in all types of memory and is aggressively competing with SK Hynix for market leadership.

- Micron: An American company, Micron is the third major player, investing heavily to catch up and innovate in the HBM space.

As a market analyst, I watch these three companies intensely. The race to produce the fastest and most power-efficient HBM stacks is one of the most critical competitions in the tech industry, and its outcome directly shapes the future of AI.

5.2. The GPUs that use it

You won’t find High Bandwidth Memory in your average laptop. Today, this premium memory is largely reserved for the most powerful data center processors.

- HBM is a cornerstone of NVIDIA’s world-leading AI accelerators, from the A100 to the H100 and the Blackwell-generation B100 GPUs.

- HBM has also been a key feature of AMD’s high-end Instinct MI-series GPUs, which are designed for high-performance computing (HPC) and AI workloads.

5.3. What’s next? Processing-in-memory (PIM)

The future of High Bandwidth Memory is not just about getting faster – it’s about getting smarter. The next major leap is a technology called Processing-in-Memory (PIM).

The idea is to integrate small processing units directly into the memory stack itself. This would allow some simple calculations to happen right where the data is stored, without needing to move it back and forth to the main GPU. In our skyscraper analogy, it’s like putting a small mail-sorting office on every floor instead of sending every single letter down to the main lobby to be sorted. This HBM-PIM technology could further reduce data movement, save power, and revolutionize the efficiency of AI computing.
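The mail-room idea can be illustrated with a toy model: to sum a large array, a conventional system ships every value across the memory interface, while a PIM-style design lets each memory bank add up its own values and send only one partial sum. This is a hypothetical sketch of the data-movement argument, not how any real HBM-PIM product is programmed; the bank layout, 4-byte values, and function names are assumptions.

```python
BYTES_PER_VALUE = 4  # assume 32-bit values

def conventional_sum(banks: list[list[int]]) -> tuple[int, int]:
    """GPU-side sum: every value crosses the memory interface first."""
    bytes_moved = sum(len(bank) for bank in banks) * BYTES_PER_VALUE
    total = sum(sum(bank) for bank in banks)  # all arithmetic happens on the GPU
    return total, bytes_moved

def pim_sum(banks: list[list[int]]) -> tuple[int, int]:
    """PIM-style sum: each bank reduces locally, then sends one partial sum."""
    partials = [sum(bank) for bank in banks]  # conceptually computed inside the stack
    bytes_moved = len(partials) * BYTES_PER_VALUE
    return sum(partials), bytes_moved  # final reduction of the partials on the GPU

# 8 hypothetical banks holding 1,024 values each (0..8191 overall).
banks = [list(range(b * 1024, (b + 1) * 1024)) for b in range(8)]

conv_total, conv_bytes = conventional_sum(banks)
pim_total, pim_bytes = pim_sum(banks)
assert conv_total == pim_total  # same answer either way
print(f"conventional: {conv_bytes} bytes moved, PIM-style: {pim_bytes} bytes moved")
```

The answer is identical; what changes is how much data has to travel, which is exactly the cost PIM aims to cut.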

6. FAQ about High Bandwidth Memory

High Bandwidth Memory is a cutting-edge technology, so it’s natural to have a few questions. Here are clear, direct answers to the most common ones we hear.

What is High Bandwidth Memory used for?

It’s used for the most demanding data-intensive tasks on the planet, such as training large AI models, running simulations in high-performance computing (HPC), and powering massive data centers and supercomputers.

What does a higher memory bandwidth mean?

A higher memory bandwidth means more data can be moved between the memory and the processor simultaneously. Think of it as a wider highway: more cars (data) can travel at the same time, which is crucial for preventing performance bottlenecks with powerful processors.

Is HBM better than DDR?

For its specific job, yes, HBM is vastly superior to the DDR RAM in your PC. However, they are designed for different tasks. HBM’s high cost and complexity make it ideal for specialized GPU accelerators, while DDR is the perfect, cost-effective solution for a computer’s main system memory.

Will my gaming PC have HBM?

It’s unlikely for now. HBM is still significantly more expensive to manufacture than the GDDR6 or GDDR7 memory used on consumer graphics cards. For gaming, high-end GDDR provides more than enough memory bandwidth for an excellent experience at a much more affordable price.

What is High Bandwidth Memory (HBM) for GPUs and AI?

HBM for GPUs and AI is 3D-stacked DRAM placed right beside a GPU or AI accelerator on the same package and linked to it by an ultra-wide bus. This design delivers multiple terabytes per second of bandwidth per accelerator, keeping these powerful processors fed with data while they train and run large AI models.

What is a silicon interposer?

A silicon interposer is a special silicon base that sits between the HBM stack and the main processor (GPU). Because the connections are so dense and tiny, they can’t be soldered directly to a normal circuit board, so the interposer acts as a high-precision bridge.

What is the difference between HBM and stacked DRAM?

Stacked DRAM is the general technological concept of stacking memory chips vertically. HBM is the specific industry standard (defined by JEDEC) that dictates how those stacked chips must operate to achieve high bandwidth and interoperability.

7. Conclusion

In the end, the answer to “what is High Bandwidth Memory?” is simple: it’s the architectural difference between a sprawling suburb of townhouses and a sleek, hyper-efficient skyscraper. It represents a fundamental shift in how we design and build the engines of computation.

Here are the key takeaways from our analysis:

- HBM is a 3D-stacked memory that provides massive bandwidth in a small, power-efficient package.

- It is fundamentally different from GDDR, which is a 2D, planar technology.

- HBM is the critical enabler of the AI revolution, feeding data to powerful GPUs at unprecedented speeds.

- While too expensive for most consumer products, it is the undisputed king of memory for data centers and supercomputers.

As AI continues to shape our world, the demand for this remarkable technology will only grow. HBM isn’t just a component; it’s a foundational pillar of the future of computing. Understanding the hardware that powers the internet is key to digital literacy, and for simpler explanations of complex tech topics, explore the Privacy & Security Basics library of Safelyo.