Think of the Bandwidth-Delay Product as the secret answer to a frustrating, common question: “Why is my internet connection slow when I’m downloading a large file from a server across the ocean, even though I pay for super-fast gigabit speeds?”

In today’s globally connected world, understanding what actually limits network efficiency is essential. A high-speed connection doesn’t always guarantee high performance over long distances, and the reason often lies in this fundamental networking concept.

If you’re wondering why your high-speed internet feels slow during long-distance downloads or VPN use, here is the essential breakdown of the Bandwidth-Delay Product (BDP):

- What is BDP?

→ It measures the maximum amount of data that can be “in-flight” on your network at any given moment, effectively representing the total volume of your connection pipe.

- The Golden Formula

→ Calculate it using BDP = Bandwidth (bps) × Round Trip Time (seconds) to see how much data is required to fully saturate your link.

- The “Water Pipe” Analogy

→ Think of bandwidth as the pipe’s width and delay as its length. If you don’t send enough water (data) to fill the volume (BDP), the flow remains a trickle regardless of the pipe’s size.

- Why high speed isn’t enough

→ On “LFNs” (Long Fat Networks: long distance + high bandwidth), a TCP window smaller than the BDP creates a bottleneck, preventing you from reaching top speeds.

- The Solution

→ Modern systems combine TCP window scaling with automatic window tuning to raise the amount of data in flight, keeping the pipe full for optimal performance on VPNs and cloud backups.

As a network performance analyst who has spent years diagnosing these “mysterious” slowdowns, I’ve seen countless times how a massive internet pipe can go underutilized. Understanding what BDP is isn’t just for engineers; it’s the key to unlocking why your high-speed connection might be underperforming and what can be done about it.

Don’t let distance dictate your download speeds. Let me demystify this crucial element of efficiency.

1. What is the bandwidth-delay product?

To truly understand the bandwidth-delay product, the best analogy I’ve ever used with students and colleagues is a simple water pipe. It instantly clarifies why a connection with massive potential can sometimes underperform.

Bandwidth: The pipe's width

First, think of your network connection’s bandwidth as the width or diameter of a water pipe. This is straightforward: a massive fire hose (high bandwidth) can obviously carry far more water at once than a tiny garden hose (low bandwidth). It represents the total data link capacity, defining the maximum data rate your hardware can physically support.

Delay / RTT: The pipe's length

Next, envision the delay, measured as the Round Trip Time (RTT), as the length of the pipe. The RTT is the time it takes for a drop of water to travel from your end to its target and back.

When it arrives, the “splash” signal returns to confirm receipt. A short pipe, such as a connection to a local server, results in a low RTT, while a long pipe, like connecting to a server on another continent, results in a high RTT.

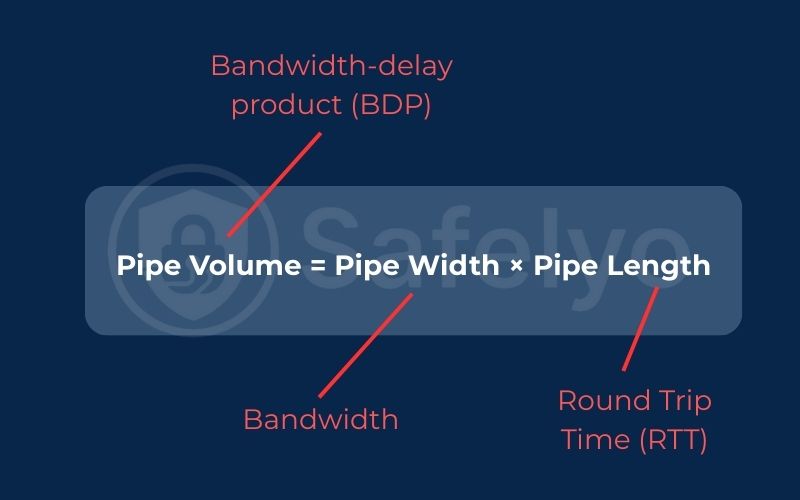

Bandwidth-delay product: The pipe's volume

Now, we put it together. The bandwidth-delay product (BDP), then, is simply the total volume of the pipe.

Pipe Volume = Pipe Width × Pipe Length

It represents the maximum amount of data (or water) that can be “in-flight” inside the network pipe at any single moment. A short, wide pipe might have the same volume as a long, narrow pipe.

Why is this "volume" the key to TCP performance?

So, why does this pipe volume matter? It comes down to how your computer actually sends files using the Transmission Control Protocol (TCP), a connection-oriented protocol designed to ensure the reliable delivery of every packet sent across the wire.

Your computer doesn’t just spray data into the pipe. It breaks files into data segments and, instead of waiting for an acknowledgment after every single segment as older stop-and-wait protocols did, it may keep a limited amount of unacknowledged data in flight. That limit, the “chunk size”, is known as the TCP window size. Once a full window has been sent, the sender must wait for acknowledgments from the other end before it can send more.

Now, picture sending a small cup of water (representing a small TCP window size) into a very long, wide pipe (indicating a high BDP network). What happens? You must wait a long time for that cup to emerge at the other end and for the confirmation to return before sending the next one. The pipe remains mostly empty, resulting in a trickle of flow.

This is the core reason why a high-bandwidth connection can still feel incredibly slow over long distances. Your connection isn’t being fully utilized because the sender is spending more time waiting than sending. To get the fastest, most continuous flow, the chunk of data you send must be large enough to fill the entire pipe.
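The “waiting instead of sending” effect can be put into numbers with a simplified model: if at most one full window can be delivered per round trip, throughput can never exceed window ÷ RTT, no matter how wide the link is. The sketch below assumes that model and ignores packet loss, slow start, and protocol overhead; the function name is hypothetical.

```python
def achievable_throughput_bps(window_bytes: int, rtt_s: float, link_bps: float) -> float:
    """Simplified throughput ceiling for a window-limited TCP sender.

    Assumes at most one full window is delivered per round trip, so the
    rate is capped by window / RTT as well as by the link's own bandwidth.
    Loss, slow start, and header overhead are ignored.
    """
    if rtt_s <= 0:
        raise ValueError("RTT must be positive")
    window_ceiling_bps = window_bytes * 8 / rtt_s  # bytes -> bits, per round trip
    return min(link_bps, window_ceiling_bps)


# A small "cup": a 65,535-byte window on a 1 Gbps link with an 80 ms RTT.
rate = achievable_throughput_bps(65_535, 0.080, 1_000_000_000)
print(f"{rate / 1e6:.2f} Mbps")  # -> 6.55 Mbps
```

In this example the window, not the gigabit link, sets the ceiling: only about 6.55 Mbps of a 1,000 Mbps pipe gets used.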

2. How to calculate the bandwidth-delay product

Now that we understand the “pipe volume” concept, let’s look at how to calculate the Bandwidth-Delay Product using real numbers. The math is surprisingly simple. Understanding these basic TCP networking parameters is the first step to optimizing your connection speed.

The Bandwidth-Delay Product formula

BDP (in bits) = Bandwidth (in bits per second) × RTT (in seconds)

The only tricky part is making sure your units are consistent. Let’s walk through the BDP calculation process step-by-step.

Step 1: Find your bandwidth

This is the advertised speed of your network connection, for example, a home internet plan of 100 Mbps or a server with a 1 Gbps port. Remember to convert it to bits per second for the calculation (1 Mbps = 1,000,000 bps).

Step 2: Find your round-trip time (RTT)

This is the “delay” part of the equation. The easiest way to find your RTT to a specific server is by using the ping command.

- On Windows, open Command Prompt.

- On macOS or Linux, open the Terminal.

Type ping followed by the address of the server you want to test.

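For example, if I’m testing my connection to a server in Japan, I might use ping iij.ad.jp. On Windows, ping sends four packets by default; on macOS and Linux it keeps running until you press Ctrl+C, so add -c 4 to send just four. Either way, the output shows the time each round trip took in milliseconds (ms), followed by a summary. You’ll want to use the average time.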
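If you want to pull the average out of ping’s summary automatically, a small parser is enough. This is a sketch that assumes English-language output in the summary formats shown in the comments (iputils ping on Linux, the macOS ping, and Windows ping); other ping variants or localized output may not match. The function name and the sample line are illustrative, not real measurements.

```python
import re
from typing import Optional

# Summary-line formats assumed here (English output):
#   Linux (iputils): "rtt min/avg/max/mdev = 139.8/142.5/145.1/1.7 ms"
#   macOS:           "round-trip min/avg/max/stddev = 139.8/142.5/145.1/1.7 ms"
#   Windows:         "Minimum = 139ms, Maximum = 145ms, Average = 142ms"
_UNIX_SUMMARY = re.compile(r"min/avg/max\S*\s*=\s*[\d.]+/([\d.]+)/")
_WINDOWS_SUMMARY = re.compile(r"Average\s*=\s*(\d+)\s*ms")


def average_rtt_ms(ping_output: str) -> Optional[float]:
    """Return the average RTT in milliseconds from ping's summary, or None."""
    for pattern in (_UNIX_SUMMARY, _WINDOWS_SUMMARY):
        match = pattern.search(ping_output)
        if match:
            # For Unix-style output, the second slash-separated value is the average.
            return float(match.group(1))
    return None


sample = "rtt min/avg/max/mdev = 139.8/142.5/145.1/1.7 ms"  # illustrative line
print(average_rtt_ms(sample))  # -> 142.5
```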

Step 3: Calculate!

Now, just plug the numbers into the formula, making sure to convert your RTT from milliseconds to seconds (1 ms = 0.001 s).

Example Calculation:

- Bandwidth: 100 Mbps (or 100,000,000 bps)

- RTT: 80 ms (or 0.080 s)

- BDP = 100,000,000 bps × 0.080 s = 8,000,000 bits

To make this number more useful, we convert it from bits to bytes (by dividing by 8):

8,000,000 bits / 8 = 1,000,000 bytes, which is equal to 1 megabyte (MB).

This result tells us that to “fill the pipe” on this specific connection, our TCP window size needs to be at least 1 MB.
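The three steps above can be wrapped into one helper that handles the unit conversions, which is where most BDP mistakes happen. This is a minimal sketch; the function name `bdp_bytes` is hypothetical and it uses decimal units (1 Mbps = 1,000,000 bps), as in the worked example.

```python
def bdp_bytes(bandwidth_mbps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product in bytes.

    Converts Mbps to bits per second and ms to seconds first, so the units
    match BDP (bits) = bandwidth (bps) x RTT (s), then divides by 8.
    """
    bandwidth_bps = bandwidth_mbps * 1_000_000  # Step 1: 1 Mbps = 1,000,000 bps
    rtt_s = rtt_ms / 1000                       # Step 2: 1 ms = 0.001 s
    bdp_bits = bandwidth_bps * rtt_s            # Step 3: the formula itself
    return bdp_bits / 8                         # bits -> bytes


# The worked example: 100 Mbps with an 80 ms RTT.
print(f"{bdp_bytes(100, 80):,.0f} bytes")  # -> 1,000,000 bytes
```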

3. Why the BDP is so important: Long fat networks (LFNs)

Now that we know how to calculate the bandwidth-delay product, we can look at the environments where this calculation stops being a theoretical exercise and becomes a critical performance factor.

The importance of the bandwidth-delay product becomes most apparent in what network engineers call Long Fat Networks (LFNs, pronounced “elephants”). This isn’t an insult; it’s a wonderfully descriptive technical term for any network circuit that has both:

- High Bandwidth (it’s “Fat”): The pipe is very wide, capable of carrying a large volume of data (e.g., 1 Gbps or higher).

- High Delay (it’s “Long”): The round-trip time is significant, usually due to vast physical distances.

Examples of an LFN:

- Transcontinental Fiber Optic Links: This is the classic example. Suppose I’m in my office in New York and need to transfer a large file to a server in a Tokyo data center. That connection is a long fat network: the bandwidth is massive (multiple gigabits), but the RTT is also high (often 150-200 ms) simply because of the speed of light and the distance the signal has to travel.

- Geostationary Satellite Connections: Satellite internet is another perfect example. The signal has to travel about 22,000 miles (roughly 36,000 km) up to a geostationary satellite and back down, and the reply has to make the same trip, resulting in a very high RTT (500 ms or more).
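The satellite figure can be checked with a back-of-the-envelope calculation. This sketch assumes a single geostationary hop with the satellite directly overhead (the shortest possible path) and signals travelling at the speed of light in vacuum; it counts four legs (up and down for the request, up and down for the reply). The constant and function names are illustrative.

```python
GEO_ALTITUDE_KM = 35_786           # geostationary altitude above the equator
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum


def geo_min_rtt_s(altitude_km: float = GEO_ALTITUDE_KM) -> float:
    """Propagation-only lower bound on RTT over one geostationary hop.

    Request: ground -> satellite -> ground station (2 legs).
    Reply:   ground station -> satellite -> ground (2 legs).
    Real slant paths, terrestrial links, and processing only add to this.
    """
    legs = 4
    return legs * altitude_km / SPEED_OF_LIGHT_KM_S


print(f"{geo_min_rtt_s() * 1000:.0f} ms")  # -> 477 ms
```

Physics alone already accounts for roughly 477 ms, so once real-world paths and equipment delays are added, the 500 ms or more quoted above is expected.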

In these LFNs, the “volume” of the pipe (the BDP) is enormous. If the TCP window size is smaller than the BDP, the connection will be severely underutilized, so the window on both the sending and receiving computers must be at least as large as the BDP. It’s like having a superhighway with a one-car-at-a-time rule.

Without specific protocol tuning, you will never achieve the peak throughput you are paying for, and your file transfers will feel mysteriously slow.


4. The solution: TCP window size and window scaling

So, we’ve identified the problem: In an LFN, the pipe’s volume (the BDP) is huge. How, then, do we send a large enough “chunk” of data to actually fill it and achieve optimum throughput? This is where a core feature of the Transmission Control Protocol (TCP) comes into play.

4.1. The original TCP window size limit

When TCP was first designed decades ago, networks were slow and simple. The protocol included a 16-bit field for the TCP window size, which meant the maximum size of a “chunk” of data was 65,535 bytes, or roughly 64 kilobytes. This field is the receiver’s advertised window, a flow-control mechanism that keeps the sender from overrunning the receiver’s buffer. It is separate from congestion-control mechanisms such as slow start and congestion avoidance, which were added to TCP later and limit the sender through their own congestion window.

In those early days, this was more than enough. But for a modern LFN, a 64 KB window size is like trying to fill a fire hose with a teaspoon. As a network engineer, I can tell you it’s completely inadequate and would be a massive bottleneck.

For example, on a 1 Gbps connection with a 100ms RTT, the BDP is about 12.5 MB. A 64 KB window would utilize less than 1% of the available bandwidth!
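The “less than 1%” figure follows directly from comparing the window with the BDP. A minimal sketch, assuming the same simplified model as before (at most one window per round trip); the function name is hypothetical.

```python
def window_utilization(window_bytes: int, bandwidth_bps: float, rtt_s: float) -> float:
    """Fraction of the link a fixed window can keep busy (capped at 1.0)."""
    bdp_bytes = bandwidth_bps * rtt_s / 8  # pipe volume in bytes
    return min(1.0, window_bytes / bdp_bytes)


# 1 Gbps link, 100 ms RTT, and the classic 16-bit maximum window.
util = window_utilization(65_535, 1_000_000_000, 0.100)
print(f"{util:.2%}")  # -> 0.52%
```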

4.2. The solution: The TCP window scaling option

To solve this critical limitation, the TCP Window Scale option was introduced as an extension to the protocol (originally defined in RFC 1323, since updated by RFC 7323). This is one of the most important innovations in the history of TCP.

It’s a clever feature that works during the initial “three-way handshake” when two computers first establish a connection.

- Both the client and the server tell each other, “Hey, I support window scaling.”

- If both sides include the option, each one then multiplies the 16-bit window value it advertises by a power-of-two “scale factor” that it announced during the handshake.

- This allows the effective TCP window size to be scaled up to a much larger value – up to a maximum of 1 Gigabyte.

This gives the sender the ability to put a massive amount of data “in-flight,” effectively “filling the pipe” of even the longest and fattest networks.
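The scaling arithmetic can be sketched as a left shift of the 16-bit window value by a shift count announced in the handshake; RFC 7323 caps the shift count at 14, which is where the roughly 1 GB ceiling comes from. This models only the arithmetic, not how an operating system actually chooses its shift count; the helper names are hypothetical.

```python
MAX_WINDOW_FIELD = 65_535  # largest value the 16-bit window field can hold
MAX_SHIFT = 14             # largest shift count allowed by RFC 7323


def scaled_window(window_field: int, shift: int) -> int:
    """Effective window in bytes: the 16-bit field value shifted left."""
    if not 0 <= window_field <= MAX_WINDOW_FIELD:
        raise ValueError("window field must fit in 16 bits")
    if not 0 <= shift <= MAX_SHIFT:
        raise ValueError("shift count must be between 0 and 14")
    return window_field << shift  # same as multiplying by 2**shift


def min_shift_for(bdp_bytes: int) -> int:
    """Smallest shift count whose largest window covers `bdp_bytes`."""
    for shift in range(MAX_SHIFT + 1):
        if scaled_window(MAX_WINDOW_FIELD, shift) >= bdp_bytes:
            return shift
    raise ValueError("BDP exceeds the ~1 GB window-scaling limit")


print(scaled_window(MAX_WINDOW_FIELD, MAX_SHIFT))  # -> 1073725440 (about 1 GB)
print(min_shift_for(12_500_000))                   # 1 Gbps x 100 ms -> 8
```

For the 12.5 MB pipe from the earlier example, a shift count of 8 (a scale factor of 256) is already enough.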

The Bottom Line

- Properly calculating your bandwidth-delay product is the first step.

- The second, and more important, step is ensuring that the operating systems on both ends have the TCP window scale option enabled and use their auto-tuning algorithms to grow the window automatically.

This is the key that unlocks the true speed potential of any long-distance, high-bandwidth connection and is fundamental to modern TCP tuning.

5. How BDP affects you (even if you’re not a network engineer)

At this point, you might be thinking, “This is fascinating, but I’m not a network engineer configuring TCP stacks. How does this actually affect me?”

The answer is: The bandwidth-delay product has a significant, often invisible, impact on the performance of many common online activities, especially those involving long distances. Understanding it helps you diagnose problems that might otherwise seem baffling.

Slow international file transfers

This is the classic, real-world symptom of a BDP mismatch. Have you ever tried to download a large multi-gigabyte file from a server in another country and found it surprisingly slow, even though you have a super-fast gigabit internet plan?

I’ve seen this happen countless times. Your connection is an LFN, and a small TCP window size on either your end or, more commonly, the server’s end is creating a bottleneck. The server sends a small chunk of data, waits for your computer’s acknowledgment to travel all the way back across the ocean, and only then sends the next small chunk. The result is a slow, inefficient transfer that never gets close to your maximum download speed.

Cloud backup performance

When you back up hundreds of gigabytes of photos or documents to remote cloud storage, your data often travels a long way: providers like Backblaze or iDrive often have servers in different states or even different countries. The BDP of that long-distance connection will be a major factor in determining the maximum upload speed you can achieve and, therefore, how long your initial backup will take.

VPN performance

This is especially relevant for Safelyo readers. When you connect to a VPN server on the other side of the world to access geo-restricted content, you are intentionally creating a long, fat network. The bandwidth is high, and the round-trip delay time is high because your traffic is being rerouted.

This is a key reason why some VPNs feel faster than others over long distances. A high-quality VPN that uses modern protocols (like WireGuard) and runs on powerful, properly tuned servers can better handle these high-BDP connections. They can maintain a larger effective TCP window size, resulting in a much better and more consistent streaming or browsing performance for you, the end-user.


6. FAQ about bandwidth-delay product

The Bandwidth-Delay Product is a technical concept, but the questions surrounding it are often very practical. Here are direct answers to the most common queries.

What is the bandwidth-delay product?

The Bandwidth-Delay Product (BDP) is the maximum amount of data that can be ‘in-flight’ on a network connection at any given moment. In simple terms, think of it as the total ‘volume’ of your network pipe, combining both its width (bandwidth) and its length (delay).

How do you calculate the bandwidth-delay product?

You use the Bandwidth-Delay Product formula: BDP = Bandwidth × RTT. First, find your bandwidth from your internet plan (e.g., 100 Mbps). Second, find your Round-Trip Time (RTT) by using the ping command to a remote server. Finally, multiply the two values, ensuring your units are consistent (bits per second and seconds).

What is the difference between bandwidth delay and latency?

Latency (or RTT) is only the ‘delay’ part of the Bandwidth-Delay Product. BDP is not the delay itself; it’s a calculation that combines both your bandwidth (capacity) and your latency (delay) to measure the network’s total ‘in-flight’ capacity.

How to find bandwidth delay?

You find the Bandwidth-Delay Product by first finding its two components: 1. Find your Bandwidth from your internet plan (e.g., 100 Mbps). 2. Find your Delay (RTT) by using the ping command to a remote server. Then, you simply multiply them together using the formula.

Do I need to manually change my TCP window size?

Almost never. Modern operating systems (Windows, macOS, Linux) have auto-tuning features that automatically adjust the TCP window size for you based on network conditions. Understanding BDP is primarily for diagnosing problems, not for manual tuning by end-users.
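If you are diagnosing a slow transfer on Linux, one rough check is whether the maximum receive buffer that auto-tuning may use is at least your BDP. Linux exposes this as the third of the three numbers (min, default, max, in bytes) in `/proc/sys/net/ipv4/tcp_rmem`. This is a sketch under that assumption: part of the buffer is reserved for overhead, so the usable window is somewhat smaller, and the result should be treated as an optimistic upper bound. The function names and the sample values in the usage line are illustrative.

```python
from pathlib import Path
from typing import Optional, Tuple

TCP_RMEM = Path("/proc/sys/net/ipv4/tcp_rmem")  # Linux-only sysctl file


def parse_tcp_rmem(text: str) -> Tuple[int, int, int]:
    """Split the 'min default max' receive-buffer triple (bytes)."""
    minimum, default, maximum = (int(value) for value in text.split())
    return minimum, default, maximum


def max_rcvbuf_covers_bdp(bdp_bytes: float, rmem_text: Optional[str] = None) -> Optional[bool]:
    """Rough check: can auto-tuning grow the receive buffer to the BDP?

    Returns None when the setting cannot be read (e.g. not on Linux).
    """
    if rmem_text is None:
        try:
            rmem_text = TCP_RMEM.read_text()
        except OSError:
            return None
    _, _, maximum = parse_tcp_rmem(rmem_text)
    return maximum >= bdp_bytes


# 1 MB BDP from the earlier example, checked against illustrative values.
print(max_rcvbuf_covers_bdp(1_000_000, "4096 131072 6291456"))  # -> True
```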

7. Conclusion

The Bandwidth-Delay Product simply tells you the “volume” of your network pipe. Understanding this concept is the key to unlocking the mystery of why some high-bandwidth connections can still feel slow over long distances. It’s a fundamental principle that governs the efficiency of data transfer across the global internet.

You don’t need to be a network engineer to appreciate the BDP. Knowing it exists helps you understand the true nature of internet performance and diagnose connection problems far more effectively. Understanding network fundamentals is a core aspect of digital literacy. For deeper insights into the technologies that ensure a secure and private internet, explore the Privacy & Security Basics library.